Hybrid Compression Method for CNN Models Targeting Reconfigurable Architectures


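DOI: 10.16652/j.issn.1004-373x.2026.01.026. Citation format: LIU Pengfei, JIANG Lin, LI Yuancheng, et al. Hybrid compression method for CNN models targeting reconfigurable architectures[J]. Modern Electronics Technique, 2026, 49(1): 167-173.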

Hybrid compression method for CNN targeting reconfigurable architectures

LIU Pengfei¹, JIANG Lin², LI Yuancheng², WU Hai³ (1. College of Electrical and Control Engineering, Xi'an University of Science and Technology, Xi'an 710600, China; 2. College of Science and Technology, Xi'an University of Science and Technology, Xi'an 710600, China; 3. College of Communication and Information Technology, Xi'an University of Science and Technology, Xi'an, China)

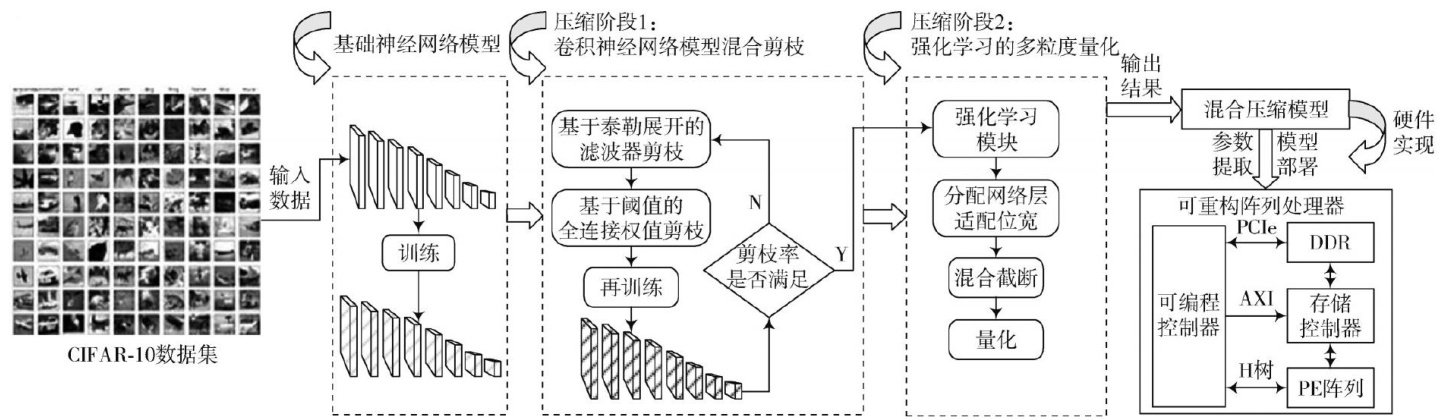

Abstract: As convolutional neural networks (CNNs) continue to grow in scale, their parameter counts and computational loads have increased significantly, causing severe memory-access bottlenecks that limit computational efficiency. To address this, a novel hybrid compression method for CNNs targeting reconfigurable architectures is proposed. The method follows a prune-then-quantize strategy that combines filter pruning based on a first-order Taylor expansion, threshold-based weight pruning for the fully connected (FC) layers, and a mixed-precision adaptive quantization approach. Together these techniques reduce the parameter count and computational complexity of the model, and the compressed model is deployed on a self-developed reconfigurable processor. Experimental results show that the proposed method achieves compression ratios of 31.4× on VGG16 and 7.9× on ResNet18, with accuracy losses of only 1.20% and 0.74%, respectively. On a reconfigurable array processor built on the Virtex UltraScale VU440 FPGA, the execution cycles of the compressed VGG16 model are reduced by up to 62.7%. These results indicate that the proposed method is suitable for resource-constrained edge computing devices.
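The abstract names three compression steps but does not give their formulas in this excerpt. The block below is a minimal pure-Python sketch of what such a prune-then-quantize pipeline can look like, under loudly stated assumptions. It is not the authors' implementation. The per-filter Taylor score |Σ g·w| (the first-order estimate of the loss change when a filter's weights are zeroed), the fixed magnitude threshold for FC weights, symmetric uniform quantization, and the rule "pick the smallest bit-width from {4, 8, 16} whose relative squared error stays within a tolerance" are illustrative choices. All function names and parameters (`keep_ratio`, `threshold`, `candidates`, `tol`) are hypothetical.

```python
"""Hypothetical sketch of a prune-then-quantize pipeline (not the paper's code).
Pure Python, so it runs without external packages."""


def taylor_filter_importance(weights, grads):
    """First-order Taylor estimate of the loss change caused by zeroing a filter.

    Zeroing filter F changes the loss by roughly -sum(g * w) over its weights,
    so |sum(g * w)| serves as the (assumed) importance score.
    `weights` and `grads` are lists of filters, each a flat list of floats."""
    return [abs(sum(g * w for g, w in zip(gf, wf))) for gf, wf in zip(grads, weights)]


def prune_filters(weights, grads, keep_ratio):
    """Keep the `keep_ratio` fraction of filters with the highest Taylor score.

    Returns the indices of the kept filters in their original order."""
    scores = taylor_filter_importance(weights, grads)
    n_keep = max(1, round(len(weights) * keep_ratio))
    ranked = sorted(range(len(weights)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])


def threshold_prune(fc_weights, threshold):
    """Zero every FC-layer weight whose magnitude is below `threshold`."""
    return [w if abs(w) >= threshold else 0.0 for w in fc_weights]


def quantize(values, bits):
    """Symmetric uniform quantization to signed `bits`-bit integer codes.

    Returns (codes, scale) such that value ~= code * scale."""
    qmax = 2 ** (bits - 1) - 1
    peak = max((abs(v) for v in values), default=0.0)
    scale = peak / qmax if peak > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def choose_bits(values, candidates=(4, 8, 16), tol=1e-3):
    """Pick the smallest bit-width whose relative squared error is <= tol.

    A hypothetical stand-in for per-layer mixed-precision adaptive quantization."""
    energy = sum(v * v for v in values) or 1.0
    for bits in sorted(candidates):
        codes, scale = quantize(values, bits)
        err = sum((v - c * scale) ** 2 for v, c in zip(values, codes))
        if err / energy <= tol:
            return bits
    return max(candidates)


if __name__ == "__main__":
    conv_w = [[0.5, -0.2, 0.1], [0.01, 0.02, -0.01], [-0.4, 0.3, 0.2]]
    conv_g = [[0.1, 0.3, -0.2], [0.05, -0.04, 0.01], [-0.2, 0.1, 0.0]]
    print("kept filters:", prune_filters(conv_w, conv_g, keep_ratio=2 / 3))
    fc = threshold_prune([0.8, -0.03, 0.002, -0.6, 0.05], threshold=0.04)
    print("pruned FC:", fc)
    print("bits:", choose_bits([w for w in fc if w != 0.0]))
```

With these toy numbers, filters 0 and 2 survive pruning (filter 1 has a near-zero Taylor score), two small FC weights are zeroed, and the remaining FC weights need 8 bits because 4 bits exceeds the assumed error tolerance. Ordering pruning before quantization means the bit-width search only sees weights that survive pruning, which matches the prune-then-quantize order the abstract describes.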

Keywords: CNN; model compression; structured pruning; adaptive quantization; parallel computing; reconfigurable architecture

0 Introduction

In recent years, convolutional neural networks (CNNs) have made major advances in many fields, including image classification, object detection[2], and natural language processing[3].