Automatic Generation of Code Summaries Based on Large Model Knowledge Distillation


Citation format: YOU G, LIU W J, et al. Code summarization based on large model knowledge distillation[J]. Command Control & Simulation, 2025, 47(4): 27-33.

CLC number: TP311; TP18    Document code: A    DOI: 10.3969/j.issn.1673-3819.2025.04.005

Code summarization based on large model knowledge distillation

YOU Gang, LIU Wenjie, LI Meipeng, SUN Liqun, WANG Lian, TIAN Tieku (Unit 96941 of PLA, Beijing, China)

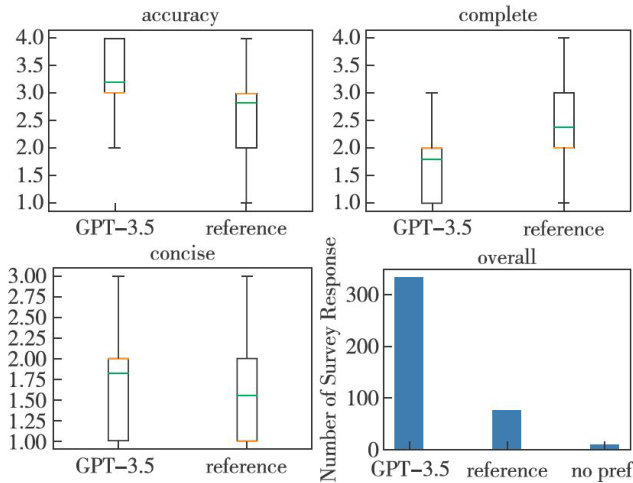

Abstract: A code summary is a short natural-language description of source code. Summaries are usually only one sentence long, but they are the primary way developers understand code. Recently, products based on large language models (such as ChatGPT) have shown a strong ability to generate these descriptions. However, to use these tools, programmers must send their code to an untrusted third party for processing (for example, through API calls), and many organizations find this unacceptable. This paper presents an alternative. We use example outputs generated by GPT-3.5 to train an open-source model through a process related to knowledge distillation. The result is a small model with 350 million parameters that performs comparably to GPT-3.5 on code summarization.
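The abstract describes the distillation step only at a high level. The sketch below illustrates the general idea of sequence-level knowledge distillation under our own assumptions. It is not the authors' implementation.

- A teacher model labels unlabeled functions with summaries.
- The (code, summary) pairs become fine-tuning data.
- The student is trained to maximize the likelihood of the teacher's tokens.

The function names `toy_teacher`, `build_distillation_set`, `to_jsonl`, and `sequence_nll` are hypothetical. The teacher is an offline stub standing in for a GPT-3.5 API call. The student's next-token distributions are hand-written dictionaries rather than real model outputs.

```python
import json
import math
from typing import Callable, Dict, List


def toy_teacher(code: str) -> str:
    """Hypothetical stand-in for the teacher (GPT-3.5 in the paper).

    A real pipeline would send `code` to the teacher model and keep its
    one-sentence summary; here a trivial summary is derived from the
    function name so the example runs offline.
    """
    name = code.split("(")[0].replace("def ", "").strip()
    return f"Defines the function {name}."


def build_distillation_set(
    functions: List[str], teacher: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Label each code snippet with the teacher's summary (a pseudo-label)."""
    return [{"code": code, "summary": teacher(code)} for code in functions]


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize the (code, summary) pairs as JSON Lines for fine-tuning."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


def sequence_nll(
    student_probs: List[Dict[str, float]], target_tokens: List[str]
) -> float:
    """Mean negative log-likelihood of the teacher's tokens under the student.

    student_probs[i] is the student's next-token distribution at step i
    (teacher forcing). Minimizing this value trains the student to
    reproduce the teacher's summaries, i.e. sequence-level distillation.
    """
    if len(student_probs) != len(target_tokens) or not target_tokens:
        raise ValueError("need one distribution per target token")
    total = 0.0
    for dist, token in zip(student_probs, target_tokens):
        # A tiny floor avoids log(0) for tokens the student never predicts.
        total -= math.log(max(dist.get(token, 0.0), 1e-12))
    return total / len(target_tokens)


if __name__ == "__main__":
    data = build_distillation_set(["def add(a, b): return a + b"], toy_teacher)
    print(to_jsonl(data))
    probs = [{"Defines": 0.5, "Returns": 0.5}, {"the": 1.0}]
    print(sequence_nll(probs, ["Defines", "the"]))
```

Because an API-based teacher exposes only generated text and not its token probabilities, this sketch trains on the teacher's outputs as hard labels. It does not match soft logits. In a real setup, the distributions passed to `sequence_nll` would come from the student model's softmax outputs during fine-tuning.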

Keywords: code summarization; large model; knowledge distillation

A code summary is a short natural-language description of source code.