Adaptive Channel-wise Feature Distillation in Semantic Segmentation


DOI:10.3969/j.issn.1671-7775.2025.05.009

CLC number: TP391; Document code: A; Article ID: 1671-7775(2025)05-0556-0

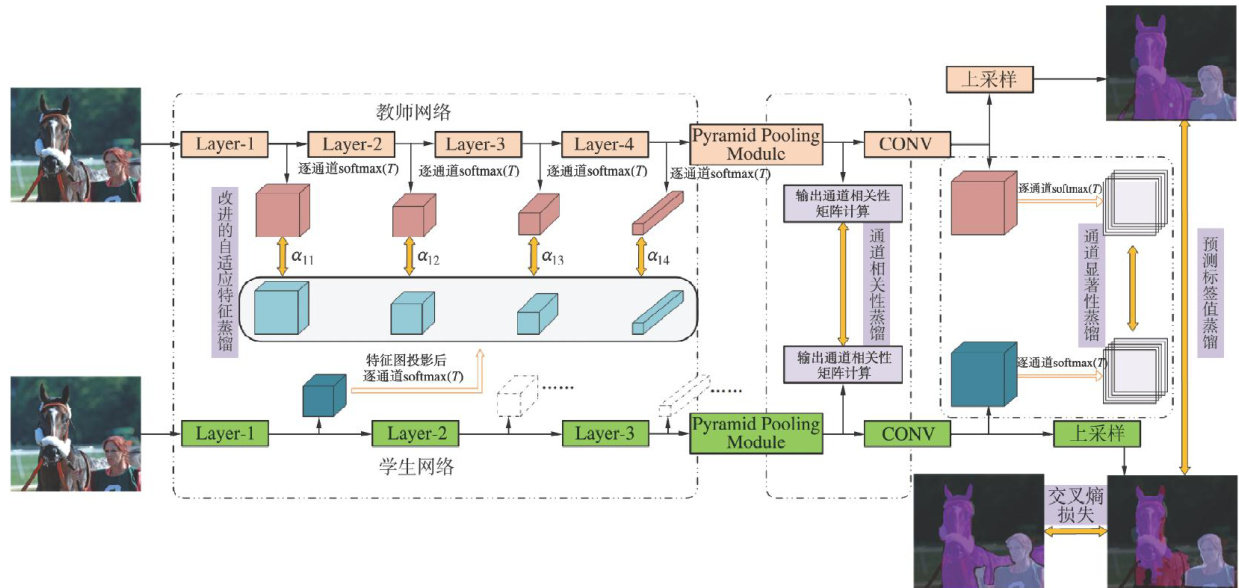

Abstract: Traditional knowledge distillation methods are not optimized for semantic segmentation and focus mainly on spatial-level feature alignment. This leads to restrictive teacher-student architecture constraints and redundant knowledge transfer. To address these issues, channel-wise features from the backbone, the feature enhancement layers, and the prediction outputs were exploited by incorporating self-attention mechanisms, softmax normalization, and correlation matrices. An adaptive channel-wise feature distillation framework was developed with three key components: adaptive feature distillation for the backbone layers, channel correlation distillation for the fusion layers, and channel significance distillation for the label layers. To verify the framework, systematic experiments were conducted on the PSPNet semantic segmentation network. The results show that the proposed method improves the segmentation accuracy of the PSPNet-ResNet18 student model by 5.79% while maintaining its inference efficiency. The framework also supports heterogeneous backbone architectures between the teacher and student models.
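The abstract names the ingredients (softmax normalization, correlation matrices, self-attention) but gives no formulas. As a hedged illustration only, the plain-Python sketch below implements two generic channel-level distillation losses:

- A channel-wise softmax KL loss, in the style of channel-wise knowledge distillation for dense prediction. Each channel is normalized into a distribution over spatial positions.
- A mean-squared loss between C x C channel correlation (cosine-similarity) matrices.

These are assumptions, not the authors' adaptive, correlation, or significance distillation losses. The self-attention weighting is not reproduced, the temperature of 4.0 is arbitrary, and all function names are hypothetical. Both losses assume the teacher and student already share a channel count. For heterogeneous backbones this would typically require an extra projection such as a 1 x 1 convolution, which is not shown.

```python
import math


def softmax(values, temperature=1.0):
    """Numerically stable softmax over a flat list of activations."""
    scaled = [v / temperature for v in values]
    peak = max(scaled)
    exps = [math.exp(v - peak) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def channel_softmax_kd(student, teacher, temperature=4.0):
    """Channel-wise softmax distillation (illustrative sketch, hypothetical name).

    student, teacher: C channels, each a flat list of H*W activations.
    Each channel becomes a distribution over spatial positions; the loss is
    KL(teacher || student) averaged over channels and scaled by T^2.
    """
    if len(student) != len(teacher):
        raise ValueError("student and teacher must have the same channel count")
    total = 0.0
    for s_ch, t_ch in zip(student, teacher):
        p_t = softmax(t_ch, temperature)
        p_s = softmax(s_ch, temperature)
        total += sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_t, p_s))
    return temperature ** 2 * total / len(teacher)


def channel_correlation(features):
    """C x C cosine-similarity matrix between flattened channels."""
    normed = []
    for ch in features:
        norm = math.sqrt(sum(v * v for v in ch)) or 1.0  # guard all-zero channels
        normed.append([v / norm for v in ch])
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in normed] for ci in normed]


def channel_correlation_kd(student, teacher):
    """Mean squared difference between student and teacher correlation matrices."""
    g_s = channel_correlation(student)
    g_t = channel_correlation(teacher)
    if len(g_s) != len(g_t):
        raise ValueError("project student channels to the teacher's count first")
    c = len(g_t)
    return sum((g_s[i][j] - g_t[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)


if __name__ == "__main__":
    # Two channels over a 2x2 feature map, flattened to 4 values each.
    teacher = [[1.0, 2.0, 0.5, 0.1], [0.2, 0.1, 3.0, 1.0]]
    student = [[0.8, 1.5, 0.7, 0.3], [0.1, 0.4, 2.0, 1.2]]
    print(channel_softmax_kd(student, teacher))
    print(channel_correlation_kd(student, teacher))
    print(channel_softmax_kd(teacher, teacher))  # identical maps give 0.0
```

Identical teacher and student maps give a loss of exactly 0.0 for both functions. In a training loop, such terms would presumably be weighted and added to the student's ordinary segmentation loss.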

Key words: semantic segmentation; knowledge distillation; model lightweighting; self-attention mechanism; normalization; correlation matrix

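With the rapid development of deep learning, the accuracy of vision tasks such as semantic segmentation has steadily improved along with advances in model architecture. These gains, however, have come with a multiplicative growth in parameter count and computation. As a result, such models cannot be deployed and used effectively on embedded, low-power devices such as unmanned aerial vehicles and robots. Methods are therefore urgently needed that reduce computation and parameter count while maintaining model accuracy.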

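Current research on model compression falls mainly into the following directions:

① Model pruning designs an evaluation criterion to judge the importance of each parameter in the model, then removes parameters according to that importance.

② Model quantization converts the 32-bit floating-point weights stored by the network into low-bit floating-point, integer, or binary values. This reduces the model's storage overhead and accelerates inference.

③ Lightweight network design builds compact networks directly from the properties of special convolutional layers and network structures. Examples include the MobileNet series [2], which uses depthwise separable convolutions; the ShuffleNet series [3-4], which uses group convolutions and channel shuffle; and GhostNet [5], which uses simple linear operations to generate additional feature maps.

Building on the idea of lightweight network design, Jin Runing et al. [6] proposed the G-lite-DeepLabv3+ architecture. It replaces the Xception feature extraction network with MobileNetV2, introduces group convolutions into MobileNetV2 and the ASPP module, and selectively removes batch normalization layers. The result is a significant reduction in parameter count while accuracy is maintained.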
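The three compression directions above can be illustrated with a minimal standard-library sketch:

- pruning by an importance criterion,
- quantization of floating-point weights to 8-bit integers,
- the parameter saving behind depthwise separable convolutions.

Several choices here are illustrative assumptions, since the text names no specific criterion or bit width: absolute magnitude as the importance score, and symmetric per-tensor int8 quantization. All function names are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero the fraction `sparsity` of weights with the smallest absolute value.

    |w| is used as the importance criterion here purely for illustration.
    """
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: returns (integer codes, scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


def standard_conv_params(c_in, c_out, k):
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """k x k depthwise conv (one filter per input channel) plus 1 x 1 pointwise conv."""
    return c_in * k * k + c_in * c_out


if __name__ == "__main__":
    w = [0.5, -0.02, 1.2, -0.8, 0.01, 0.3]
    print(magnitude_prune(w, 0.5))  # [0.5, 0.0, 1.2, -0.8, 0.0, 0.0]
    codes, scale = quantize_int8(w)
    print(codes, dequantize(codes, scale))
    print(standard_conv_params(256, 256, 3), depthwise_separable_params(256, 256, 3))
    # 589824 67840
```

For a 3 x 3 layer mapping 256 channels to 256, the depthwise separable form needs 67,840 weights instead of 589,824, about 11.5%. This agrees with the ratio 1/c_out + 1/k². Storing int8 codes instead of 32-bit floats cuts weight storage by a factor of four, at the cost of a rounding error of at most half the scale per weight.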