RGB-T tracking network based on multi-modal feature fusion

JIN Jing, LIU Jianqin*, ZHAI Fengwen

(School of Electronic and Information Engineering, Lanzhou Jiaotong University, Lanzhou 730070, China) *Corresponding author, E-mail: 1970477938@qq.com

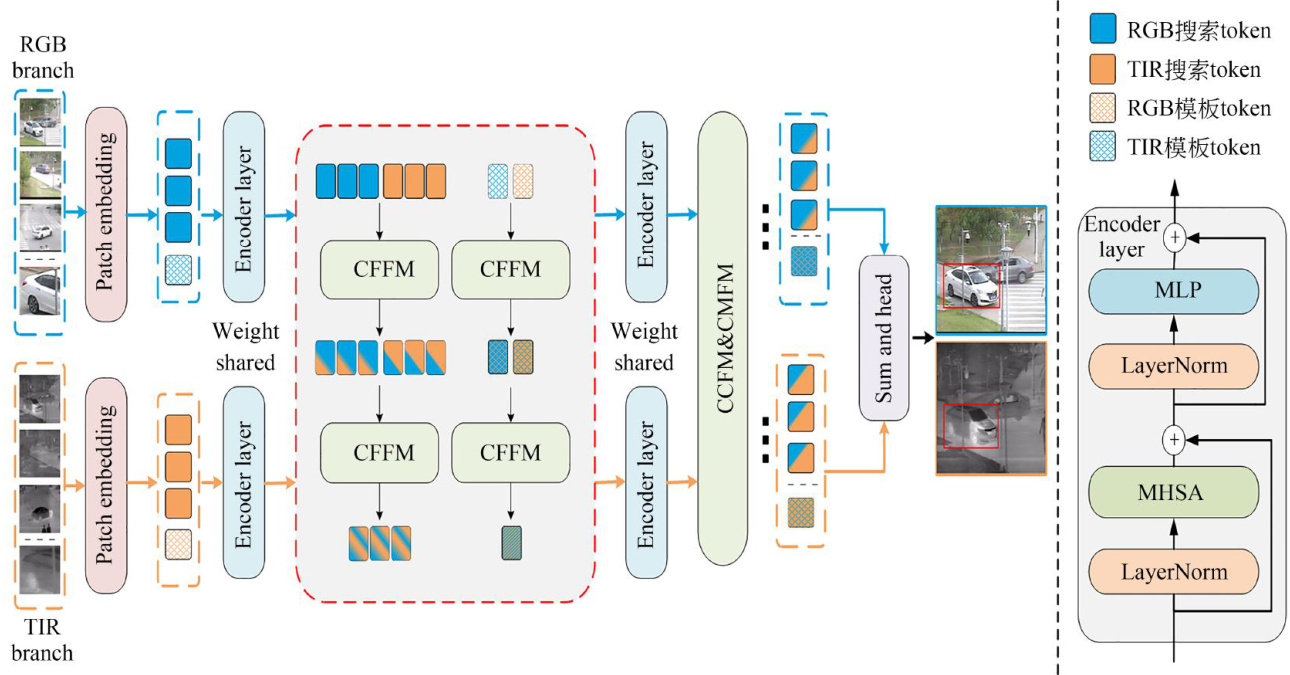

Abstract: In recent years, RGB-T tracking methods have been widely used in visual tracking tasks because visible (RGB) and thermal infrared (TIR) images provide complementary information. However, existing RGB-T moving target tracking methods have not yet made full use of the complementary information between the two modalities, which limits tracker performance. In particular, existing Transformer-based RGB-T tracking algorithms still lack direct interaction between the two modalities, which prevents full use of the original semantic information of the RGB and TIR modalities. To solve this problem, this paper proposes a Multi-modal Feature Fusion Tracking Network for RGB-T (MMFFTN). First, after preliminary features are extracted by the backbone network, a Channel Feature Fusion Module (CFFM) is introduced to realize direct interaction and fusion of RGB and TIR channel features. Second, to address the unsatisfactory fusion caused by the differences between the RGB and TIR modalities, a Cross-Modal Feature Fusion Module (CMFM) is designed, which further fuses the global RGB and TIR features through an adaptive fusion strategy to improve tracking accuracy. The proposed tracking model was evaluated in detail on three datasets: GTOT, RGBT234 and LasHeR. Experimental results demonstrate that, compared with the advanced Transformer-based tracker ViPT, MMFFTN improves the success rate and precision rate by 3.0% and 4.7%, respectively; compared with the Transformer-based tracker SDSTrack, it improves the success rate and precision rate by 2.4% and 3.3%, respectively.
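The abstract describes two fusion stages: channel-level interaction between RGB and TIR features (CFFM), followed by adaptive fusion of the global features (CMFM). The internals of both modules are not given in this excerpt, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It substitutes a generic squeeze-and-excitation-style cross-modal channel gate for CFFM and a softmax-weighted two-branch fusion for CMFM's adaptive strategy. Features are plain C×N nested lists (channels × tokens). The function names `channel_cross_gate` and `adaptive_fuse`, and the use of mean activation as the per-modality score, are hypothetical stand-ins for learned layers.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_descriptor(feat):
    """Global average pooling over tokens: one scalar per channel."""
    return [sum(ch) / len(ch) for ch in feat]


def channel_cross_gate(rgb, tir):
    """Hypothetical stand-in for CFFM: each modality receives the other
    modality's channels, scaled by gates computed from that other modality."""
    assert len(rgb) == len(tir), "both modalities need the same channel count"
    g_from_tir = [sigmoid(d) for d in channel_descriptor(tir)]
    g_from_rgb = [sigmoid(d) for d in channel_descriptor(rgb)]
    rgb_out = [[x + g * y for x, y in zip(rc, tc)]
               for rc, tc, g in zip(rgb, tir, g_from_tir)]
    tir_out = [[y + g * x for x, y in zip(rc, tc)]
               for rc, tc, g in zip(rgb, tir, g_from_rgb)]
    return rgb_out, tir_out


def adaptive_fuse(rgb, tir, temperature=1.0):
    """Hypothetical stand-in for CMFM's adaptive strategy: a softmax over two
    modality scores (assumed here to be mean activation) gives convex weights."""
    s_rgb = sum(channel_descriptor(rgb)) / len(rgb)
    s_tir = sum(channel_descriptor(tir)) / len(tir)
    m = max(s_rgb, s_tir)  # subtract the max for numerical stability
    e_rgb = math.exp((s_rgb - m) / temperature)
    e_tir = math.exp((s_tir - m) / temperature)
    w_rgb = e_rgb / (e_rgb + e_tir)
    w_tir = 1.0 - w_rgb
    fused = [[w_rgb * x + w_tir * y for x, y in zip(rc, tc)]
             for rc, tc in zip(rgb, tir)]
    return fused, (w_rgb, w_tir)


if __name__ == "__main__":
    rgb = [[1.0, 1.0], [0.0, 0.0]]  # 2 channels x 2 tokens
    tir = [[0.0, 0.0], [2.0, 2.0]]
    r, t = channel_cross_gate(rgb, tir)
    fused, weights = adaptive_fuse(r, t)
    print(weights, fused)
```

Because the two weights come from a softmax, the fused feature is always a convex combination of the gated RGB and TIR features, so a modality that is uninformative in a given frame can be down-weighted rather than discarded. In a trained tracker, the gates and modality scores would be produced by learned layers instead of raw channel means.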

Key Words: RGB-T tracking; Transformer; channel feature fusion; cross-modal feature fusion

1 Introduction

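In recent years, moving target tracking, as one of the fundamental tasks in computer vision, has demonstrated broad application value in many fields, including robotics[1], UAV missions, autonomous vehicles, and video surveillance[2].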