Video Summarization Model Based on Multi-Scale Temporal Modeling and Dynamic Spatial Feature Fusion

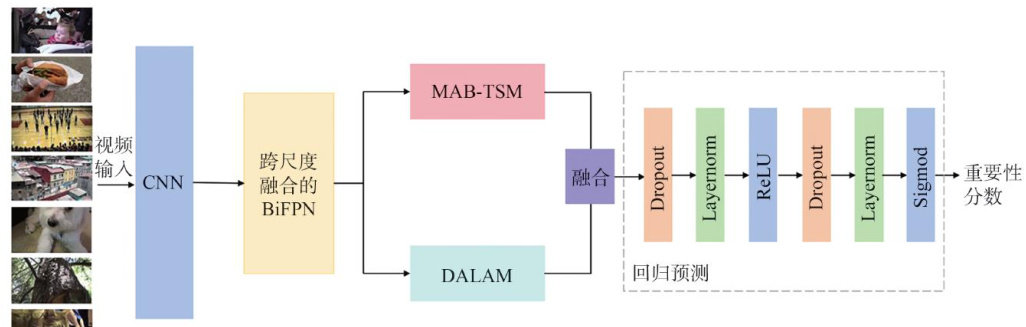

Abstract: To address insufficient multi-scale temporal modeling and local feature modeling in video summarization, this paper proposes a video summarization model that combines multi-scale time shift with a deformable local attention mechanism. First, a multi-scale adaptive bidirectional time shift module (MAB-TSM) is designed. Through learnable dynamic shift step size prediction and multi-scale dilated convolution, it adaptively models both long-term and short-term temporal dependencies in video. Second, a deformable local attention module (DALAM) is designed. Combined with a dynamic video segmentation strategy and an adaptive sampling position adjustment mechanism, it reduces computational complexity while enhancing the fine-grained feature representation of local key regions. In addition, the BiFPN network for cross-scale fusion is improved: a cross-scale attention enhancement module is added on top of BiFPN to strengthen the complementary representation of multi-scale features. The proposed model was evaluated in multiple experiments on the SumMe and TVSum datasets. In the canonical setting, its F1 scores reached 56.8% and 62.6% respectively, outperforming existing methods. Moreover, the Kendall rank correlation coefficient and the Spearman rank correlation coefficient reached 0.153 and 0.200 respectively, demonstrating excellent consistency. The experimental results demonstrate the accuracy and effectiveness of the model on the video summarization task.

Key words: computer vision; edge detection; geometric figure; curve fitting; subpixel
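
The Kendall and Spearman rank correlation coefficients cited in the abstract measure how well one ranking agrees with another. The following is a minimal, dependency-free sketch of both statistics, not the paper's evaluation code: the function names (`kendall_tau`, `average_ranks`, `spearman_rho`) and the sample scores are hypothetical, and the Kendall variant shown is the simple tau-a, which may differ from the variant used in the paper.

```python
from itertools import combinations
from math import sqrt


def kendall_tau(x, y):
    """Kendall's tau-a: (concordant - discordant) / total number of pairs."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(x)), 2):
        sign = (x[i] - x[j]) * (y[i] - y[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
        # Tied pairs (sign == 0) count toward neither total in tau-a.
    n_pairs = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / n_pairs


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when one sequence is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical frame-importance scores: model predictions vs. annotations.
predicted = [0.1, 0.4, 0.3, 0.9]
annotated = [0.2, 0.5, 0.1, 0.8]
tau = kendall_tau(predicted, annotated)
rho = spearman_rho(predicted, annotated)
```

Both statistics range from -1 (reversed ranking) to 1 (identical ranking), so values such as 0.153 and 0.200 indicate positive but partial agreement between the two rankings.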

1 Introduction

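With the explosive growth in the volume and content of video data, video summarization has become an important research direction in computer vision and video analysis.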